Guardians of AI: Dr Andrew Hutson Of QFlow Systems On How AI Leaders Are Keeping AI Safe, Ethical, Responsible, and True


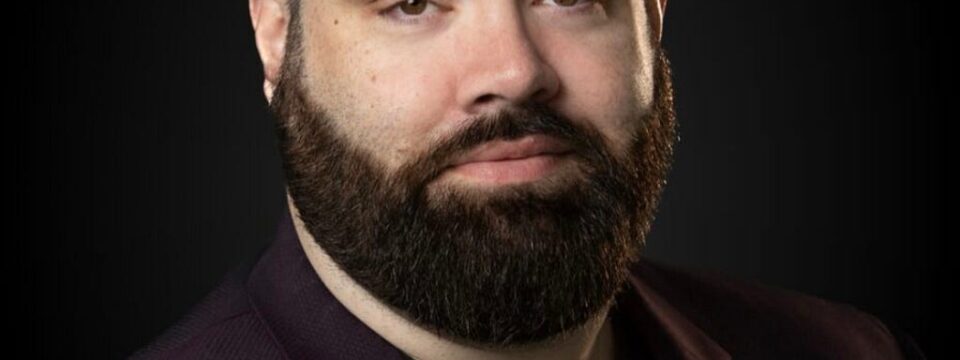

As AI technology rapidly advances, ensuring its responsible development and deployment has become more critical than ever. How are today’s AI leaders addressing safety, fairness, and accountability in AI systems? What practices are they implementing to maintain transparency and align AI with human values? To address these questions, we had the pleasure of interviewing Dr. Andrew Hutson.

Dr. Andrew Hutson holds a PhD in Informatics from the University of Missouri and has extensive experience building and deploying predictive models, recommendation engines, and SaaS products. Currently, he is leading efforts to integrate private large language models with knowledge graphs for public sector applications, addressing challenges like data privacy and ethical AI use.

Dr. Hutson is a thought leader in AI ethics, as demonstrated by his recent presentation on how legal counsel, record managers, and IT leaders can avoid common pitfalls in generative AI strategies. With a deep understanding of aligning advanced technologies with human values, he offers a unique perspective on ensuring AI systems remain safe, ethical, and responsible. This series provides a platform to share his expertise and contribute to the global dialogue on ethical AI innovation.

Thank you so much for your time! I know that you are a very busy person. Before we dive in, our readers would love to “get to know you” a bit better. Can you tell us a bit about your ‘backstory’ and how you got started?

My journey into AI began during my college years, driven by both necessity and curiosity. As a student on merit and athletic scholarships, balancing football with dual degrees in economics and political science was no small feat. It became essential for me to be exceptionally strategic with my time. This challenge sparked an early fascination with finding ways to synthesize vast amounts of information efficiently.

I began experimenting with techniques to analyze data and streamline my assignments. These small-scale “personal productivity experiments” laid the groundwork for what would become a career in informatics and AI. During my PhD studies at the University of Missouri, I had the opportunity to apply this passion to the field of healthcare, particularly electronic medical records (EMRs). One memorable project was building a makeshift EMR system using Microsoft SharePoint for a free clinic in Columbia, Missouri. This experience taught me how to design systems that manage sensitive patient data securely and efficiently, a challenge that underscored the importance of privacy and security in data-driven solutions.

My PhD research further solidified my commitment to advancing informatics in ways that directly benefit healthcare. I worked on improving how data could be utilized to enhance clinical workflows while safeguarding patient confidentiality. These principles of data security, ethical design, and practical implementation have guided my work ever since.

Playing football taught me discipline, adaptability, and the importance of strategy — qualities I now apply to building AI systems that are both innovative and ethical. Today, my work focuses on developing private large language models augmented with knowledge graphs. These systems are designed not only to address public sector challenges but also to prioritize robust privacy protocols and domain-specific ethical considerations, ensuring that the solutions are impactful and responsible.

None of us can achieve success without some help along the way. Is there a particular person who you are grateful for, who helped get you to where you are? Can you share a story?

I’ve been fortunate to have many mentors, but one who truly stands out is Dr. Belden. He dedicated his career as a physician to improving patient interactions by ensuring the best information was available at the point of care. Working with him taught me invaluable lessons about uncovering the root causes of complex issues, identifying the foundational principles to design effective solutions, and sifting through vast amounts of data to find the right signal at the right time.

Dr. Belden’s mentorship extended beyond our professional relationship. He hired me full-time during graduate school, encouraged me to pursue my PhD, and even sat on my PhD panel. His guidance didn’t stop there — he introduced me to key leaders at Cerner, which ultimately helped me launch my first company.

The impact he had on my career is immeasurable, and I am forever grateful for his belief in me and his willingness to invest his time and knowledge. His influence continues to shape how I approach problem-solving and innovation in AI today.

You are a successful business leader. Which three character traits do you think were most instrumental to your success? Can you please share a story or example for each?

Curiosity:

From an early stage in my career, curiosity has driven me to explore and solve complex problems. Whether it was experimenting with data synthesis techniques in college or designing predictive models, I’ve always been eager to understand how systems work and how they can be improved. For example, while working on a project integrating knowledge graphs with AI, my curiosity led me to dive deep into ontological structures, which ultimately improved the system’s ability to surface relevant insights for public sector applications.

Resilience:

The journey of building a business and launching innovative AI systems comes with its fair share of setbacks. During my first startup, there were moments when technical hurdles seemed insurmountable, and we had to pivot our entire strategy. Staying focused, learning from failures, and adapting quickly were crucial to turning those challenges into opportunities.

Collaboration:

Transforming ideas into reality requires a team. This was a lesson I learned firsthand playing college football, where our team worked its way to the national championship. Players can be great individually, but it’s teams that win championships. Everyone had to play their part, and we all knew we couldn’t achieve success alone. That principle stuck with me and continues to guide my approach to leading teams in the AI space. Building collaborative environments where every member contributes meaningfully is key to turning innovative ideas into impactful outcomes.

Thank you for all that. Let’s now turn to the main focus of our discussion about how AI leaders are keeping AI safe and responsible. To begin, can you list three things that most excite you about the current state of the AI industry?

Mass Awareness of Generative AI Techniques:

It’s incredible to see how generative AI has become part of mainstream conversations. A decade ago, I was optimizing machine learning techniques on GPUs because they were more efficient than CPUs, and most people would glaze over if I talked about predicting allele expression for genetic conditions using these tools. Today, the tables have turned — people approach me to share the exciting applications they envision, from creative endeavors to problem-solving in their industries. This broad awareness is accelerating innovation at a pace we’ve never seen before.

The Spark of Imagination:

The growing awareness has ignited creativity in unexpected ways. People are not only sharing their ideas for positive applications of generative and predictive models but also engaging in critical discussions about potential pitfalls. This duality of exploration and caution has sparked deeper industry conversations — including this interview — about how we ensure AI remains safe, ethical, and impactful.

The Fusion of Human and Machine Collaboration:

We’re at a unique juncture where AI systems are no longer just tools but collaborative partners. Steve Jobs once described the bicycle as an invention that made humans the most efficient locomotive animals on the planet, and he likened the personal computer to a “bicycle for the mind.” I see predictive and generative models as the next iteration of that concept: more than a bicycle, a “multiplier for the mind.” These tools give us the potential to multiply our individual brain power by allowing us to prioritize our focus without sacrifice. However, like the personal computer, they also carry the risk of leaving some people behind. Striking a balance between advancing the technology and ensuring accessibility for all is both an exciting and challenging aspect of this industry’s evolution.

Conversely, can you tell us three things that most concern you about the industry? What must be done to alleviate those concerns?

Mass Awareness Without Understanding:

While I’m excited by the growing awareness of generative AI, I’m equally concerned that it isn’t accompanied by a widespread understanding of how these techniques actually function. This gap leads to both overestimations and underestimations of AI’s capabilities. People might trust AI too much in critical scenarios or dismiss its potential in transformative applications. To address this, we need robust education initiatives to demystify AI for the public and encourage informed interactions with these technologies.

Lack of Training Controls:

Another concern is the limited regulatory oversight around how companies train their models. The absence of standardized controls can result in models being trained on biased, unauthorized, or sensitive data, leading to ethical and legal challenges. It’s crucial to establish enforceable guidelines for model training, ensuring transparency, accountability, and adherence to ethical principles.

Privacy and Security Divide:

Generative AI’s accessibility comes with a hidden cost: the growing divide between those willing to forgo privacy and security to access these tools and those who prioritize safeguarding their information. This disparity could create a significant gap in access to technology. To mitigate this, we must develop privacy-preserving AI solutions that allow users to engage with these models without compromising their security, ensuring that the benefits of AI are inclusive and equitable.

As a CEO leading an AI-driven organization, how do you embed ethical principles into your company’s overall vision and long-term strategy? What specific executive-level decisions have you made to ensure your company stays ahead in developing safe, transparent, and responsible AI technologies?

Embedding ethical principles into our company’s vision starts with an uncompromising stance on data security and customer trust. We believe that our customers should be able to leverage the power of open-source large language models (LLMs) without worrying about their data being exposed or misused. That’s why we prioritize local LLM hosting, ensuring that sensitive data never leaves the customer’s environment and is never shared with third parties.

One of our core principles is that every second spent organizing and interacting with company data should enhance the overall reliability and efficacy of LLM outputs. This means our systems are designed to not only deliver results but also improve over time by aligning outputs with the unique context of each organization. To achieve this, we’ve adopted privacy-first architectures and implemented mechanisms like fine-tuning models within secure, local environments, keeping customer data fully under their control.

At an executive level, I’ve championed a commitment to open-source solutions, giving customers transparency and flexibility in how they use AI. Additionally, we’ve invested in tooling and processes that help organizations organize their data more effectively, creating a foundation for trustworthy and actionable AI insights. By aligning our technical practices with ethical imperatives, we ensure that safety, transparency, and responsibility remain at the heart of everything we build.

Have you ever faced a challenging ethical dilemma related to AI development or deployment? How did you navigate the situation while balancing business goals and ethical responsibility?

Our biggest ethical dilemma arose when deciding how to provide the power of LLMs to our customers. As we examined how other cutting-edge technology companies approached LLM integration, the pattern was clear: nearly everyone was relying on AI solutions from major players like OpenAI. This model, while convenient, required sending customer data to third-party platforms.

We had a choice to make: should we follow this established approach for its simplicity, or should we prioritize customer privacy, even if it meant a more complex and expensive development process? Ultimately, we chose to listen to our customers. Many of them were actively avoiding LLMs, not because they didn’t see the value, but because they couldn’t trust the privacy and security of their data if it had to be sent to a third party.

That feedback made the decision straightforward. By committing to local LLM hosting and ensuring no customer data ever leaves their environment, we were able to solve a real problem. While it required additional investment and technical innovation on our part, this approach aligned perfectly with our ethical principles and reinforced our mission to deliver safe, transparent, and responsible AI solutions tailored to our customers’ needs.

Many people are worried about the potential for AI to harm humans. What must be done to ensure that AI stays safe?

It’s difficult to address this question comprehensively because much of the conversation is clouded by speculative opinions, hype, and sensationalism. The first step is to separate fact from fiction — distinguishing realistic concerns from exaggerated fears. Only then can we focus on actionable solutions.

Once we agree on a set of realistic threats that AI could introduce — such as bias amplification, data misuse, or unintended autonomous decision-making — we can work collaboratively to address them. This requires close coordination between technologists, lawmakers, and law enforcement to ensure that the solutions effectively mitigate risks without stifling innovation or infringing on individual freedoms.

For example, data privacy must be a cornerstone of safe AI design. Local hosting of LLMs, as we’ve implemented, ensures that sensitive data never leaves the customer’s control. Similarly, robust governance frameworks and ethical oversight can ensure that AI systems are transparent and accountable.

Ultimately, safeguarding AI is not just a technical challenge but also a societal one. By fostering collaboration across disciplines and maintaining a balanced approach, we can mitigate risks while preserving the immense potential AI has to offer.

Despite huge advances, AIs still confidently hallucinate, giving incorrect answers. In addition, AIs will produce incorrect results if they are trained on untrue or biased information. What can be done to ensure that AI produces accurate and transparent results?

The challenge of poor reliability and confident hallucinations in AI stems largely from the models being trained on vast amounts of generic data, most of which lacks relevance or context for specific use cases. Third-party AI solutions exacerbate this issue by failing to align with the specific knowledge and needs within an organization.

To address this, we’ve implemented a targeted approach that combines local hosting of LLMs with innovative techniques like Retrieval-Augmented Generation (RAG). Our models are reinforced with company-specific data, ensuring the information they generate is accurate and contextually relevant. At the core of our solution is a graph database that effortlessly builds ontological relationships tailored to the company’s unique needs. This Knowledge Graph acts as a backbone, enhancing the LLM’s ability to retrieve and generate precise information while reducing the likelihood of hallucinations.

By combining LLMs with Knowledge Graphs, we’ve found a solution that significantly improves reliability and transparency. This architecture not only grounds the AI in domain-specific knowledge but also allows us to continuously refine and expand the system as advancements are made in both graph database technologies and AI models.

Ensuring accuracy and transparency is an ongoing effort, and we are committed to staying at the forefront of these developments to deliver trustworthy and effective AI solutions for our customers.
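To make the idea of grounding an LLM in a Knowledge Graph more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not QFlow's implementation: the graph is a plain dictionary standing in for a real graph database, and the sample data, the function names `retrieve_facts` and `build_prompt`, and the prompt wording are all hypothetical.

```python
from collections import deque

# Hypothetical toy knowledge graph: subject -> list of (relation, object).
# A real deployment would query a graph database instead of a dict.
GRAPH = {
    "Invoice-42": [("issued_by", "Acme Corp"), ("approved_by", "J. Smith")],
    "Acme Corp": [("located_in", "Missouri")],
    "J. Smith": [("works_in", "Finance")],
}


def retrieve_facts(entity, max_hops=2):
    """Breadth-first walk that collects (subject, relation, object)
    triples reachable from `entity` within `max_hops` edges."""
    facts = []
    seen = {entity}
    queue = deque([(entity, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for relation, obj in GRAPH.get(node, []):
            facts.append((node, relation, obj))
            if obj not in seen:
                seen.add(obj)
                queue.append((obj, depth + 1))
    return facts


def build_prompt(question, entity, max_hops=2):
    """Assemble a prompt that restricts the model to retrieved facts."""
    facts = retrieve_facts(entity, max_hops)
    lines = [f"- {s} {r.replace('_', ' ')} {o}" for s, r, o in facts]
    context = "\n".join(lines) if lines else "- (no facts found)"
    return (
        "Answer using only the facts below. "
        "If they are insufficient, say so.\n"
        f"Facts:\n{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("Who approved Invoice-42?", "Invoice-42"))
```

The resulting prompt would then be passed to a locally hosted model. Keeping retrieval as an explicit, inspectable step is one way the facts behind an answer can be shown to the user.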

Based on your experience and success, what are your “Five Things Needed to Keep AI Safe, Ethical, Responsible, and True”?

- Privacy-First Design:

AI systems must embed privacy at their core. For instance, we decided to prioritize local LLM hosting for all our solutions. This ensures that sensitive customer data never leaves their environment, addressing concerns about data exposure with third-party providers. For example, one client in the healthcare sector was hesitant to adopt generative AI until they saw how our approach guaranteed data remained entirely within their control. This not only solved a real problem for them but also demonstrated the tangible value of privacy-first systems.

- Traceability:

For many, AI is still a black box. Understanding the probabilities inherent in generative transformers or the logic that leads to specific outputs can be daunting. That’s why we focus on making AI decision trees transparent, auditable, and improvable. For example, in our enterprise solutions, we provide clear pathways showing how each decision or suggestion was generated, allowing users to trust and refine the system. This traceability is crucial for enterprise adoption, where accountability and the ability to explain outcomes are non-negotiable.

- Support for Open-Source Development:

AI should benefit society broadly, not just a few entities. We actively support the development and use of open-source models, ensuring transparency in their architecture and accessibility for all. By leveraging and contributing to these open platforms, we enable innovations that are both ethical and widely available. For example, we’ve built tools that integrate seamlessly with leading open-source LLM frameworks, empowering organizations of all sizes to harness the benefits of AI without compromising security or affordability.

- Ethical Training Data:

The foundation of any AI model is the data it’s trained on, and right now, much of it is a mix of ethically sourced and questionable datasets. We advocate for regulations that protect intellectual property and require explicit opt-in consent for contributions to the global corpus of information. For example, in our own systems, we ensure that data sources are fully documented, with consent clearly established. This ethical foundation ensures our models are not only effective but also respectful of individual and intellectual rights.

- Domain-Specific Knowledge Integration:

Generic AI often struggles to deliver accurate or relevant outputs because it lacks domain-specific context. By combining LLMs with Knowledge Graphs, we enhance relevance and accuracy while reducing hallucinations. For example, a manufacturing client used our solution to map their supply chain processes into a Knowledge Graph. This integration allowed the AI to provide grounded and actionable insights, aligning outputs with their specific needs and driving real-world value.
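The traceability point in the list above can be illustrated with a small Python sketch of an audit record for each generated answer. This is a hedged example rather than a description of any actual product: the `TraceRecord` class, the `log_answer` helper, the field names, and the choice of a SHA-256 fingerprint are all assumptions made for illustration.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class TraceRecord:
    """Hypothetical audit entry tying an answer to its sources."""
    question: str
    answer: str
    source_ids: list
    model_name: str
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def fingerprint(self):
        """SHA-256 over the content fields (timestamp excluded), so any
        later change to the question, answer, or sources is detectable."""
        payload = {k: v for k, v in asdict(self).items() if k != "created_at"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()


def log_answer(log, question, answer, source_ids, model_name):
    """Append a record and its fingerprint to an in-memory audit log."""
    record = TraceRecord(question, answer, list(source_ids), model_name)
    log.append({"record": asdict(record), "sha256": record.fingerprint()})
    return record


if __name__ == "__main__":
    audit_log = []
    log_answer(
        audit_log,
        "Who approved Invoice-42?",
        "J. Smith approved it.",
        ["graph:Invoice-42"],
        "local-llm",
    )
    print(json.dumps(audit_log, indent=2))
```

Storing the source identifiers next to each answer is what lets a reviewer later check which documents or graph nodes a given output relied on.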

Looking ahead, what changes do you hope to see in industry-wide AI governance over the next decade?

Over the next decade, I hope to see a comprehensive and balanced approach to AI governance that prioritizes transparency, accountability, and inclusivity while fostering innovation. Here are the key changes I envision:

- Global Standards for Data Privacy and Security:

AI governance should establish clear, enforceable global standards for data privacy and security. This includes requiring explicit opt-in consent for data usage and protecting intellectual property. A unified approach would eliminate the ambiguity surrounding cross-border data sharing and give users greater control over their contributions to AI models.

- Mandatory Traceability for AI Systems:

Every AI model should include built-in mechanisms for traceability, making decision paths and data sources auditable and understandable. This is particularly crucial for enterprise adoption, where accountability and transparency are essential. Governance frameworks must enforce these traceability requirements to ensure trust and reliability in AI systems.

- Support for Open-Source Innovation:

Governments and organizations should invest in and support the development of open-source AI models. Open-source initiatives democratize access to advanced AI technologies, ensuring that smaller companies and underserved communities can participate in and benefit from the AI revolution.

- Ethical Data Regulation:

Regulators must address the current gaps in how training data is sourced. This includes outlawing the use of data obtained without consent and creating mechanisms to allow individuals to opt in to contribute their data. Such regulations would protect individual rights and ensure that AI models are built on ethically sourced datasets.

- Interdisciplinary Governance:

AI governance bodies should include a diverse range of stakeholders — technologists, ethicists, policymakers, and community representatives — to address the multifaceted challenges AI presents. This interdisciplinary approach would help align AI development with societal values while mitigating unintended consequences.

What do you think will be the biggest challenge for AI over the next decade, and how should the industry prepare?

The biggest challenge for AI over the next decade will be managing the balance between rapid innovation and equitable, ethical adoption. To address this, the industry must tackle several interconnected issues:

- Data Privacy and Training Data Quality:

The quality of training data is foundational to AI reliability and safety. Many current datasets mix ethical and questionable sources, leading to biases and inaccuracies. The industry must prioritize using ethically sourced, high-quality data while ensuring robust privacy protections, such as explicit opt-in mechanisms for data sharing. Governance should also emphasize transparency in how data is curated and used.

- Access to Pre-Trained Models:

Access to high-performing, pre-trained models is essential for democratizing AI. Open-source initiatives should be expanded to ensure that smaller organizations and underserved communities can leverage advanced models without facing prohibitive costs. These pre-trained models should also be optimized for specific use cases, making them more practical for a wide range of industries.

- Education to Use the Models:

AI’s potential can only be fully realized if users understand how to use these tools effectively. Educational programs that focus on teaching individuals and organizations how to integrate, fine-tune, and interpret AI systems will be critical. This includes not just technical skills but also fostering an understanding of AI’s limitations, risks, and ethical considerations.

- Affordable Hardware to Run Models:

The hardware required to deploy and run AI models is often a barrier for smaller organizations. The industry must focus on developing cost-efficient, high-performance hardware solutions to make AI accessible to more users. Innovations in hardware optimization and cloud infrastructure will play a vital role in lowering these barriers.

- Building Trust in AI:

The black-box nature of many AI systems erodes trust, particularly in high-stakes applications. Addressing this requires enhancing explainability, traceability, and reliability. Transparent decision-making processes, combined with strong governance frameworks, will ensure users feel confident in deploying AI solutions.

You are a person of great influence. If you could inspire a movement that would bring the most good to the most people, what would that be? You never know what your idea can trigger. 🙂

The information age has brought incredible opportunities, but it has also introduced two significant challenges: a widening gap between those with technological skills and those without, and the sheer overwhelm caused by the volume, veracity, velocity, and variety of information we are asked to synthesize as humans. I want to fix that.

My vision for the future of AI is a world where recalling and incorporating the combination of personal, group, societal, and global information into any decision is incredibly easy — barely an inconvenience. I see a future where AI empowers every individual to operate at the cognitive level of Bradley Cooper’s character in Limitless. Imagine every person having seamless access to the insights and tools needed to make the best possible decisions, without being burdened by the complexities of data management or analysis.

To achieve this, we must build the right combination of tools and innovative experiences — ones that amplify human potential, rather than replace it. These tools must be accessible, ethical, and designed to close the skill gap, not widen it. If we can get that right, we’ll create a world where knowledge and creativity are no longer constrained, and humanity can truly reach its full potential — a world without limit.

How can our readers follow your work online?

Readers can follow our work online by visiting our website at qflow.com and connecting with us on LinkedIn. To connect with me, please find me on LinkedIn.

Thank you so much for joining us. This was very inspirational.

Guardians of AI: Dr Andrew Hutson Of QFlow Systems On How AI Leaders Are Keeping AI Safe, Ethical… was originally published in Authority Magazine on Medium.